Python is not really known as a language for servers. However, the community simply refuses to die down and will force-fit Python into everything. In the Pythonic way, great libraries get written to support all kinds of functionality. Today I am going down a rabbit hole to figure out how to do asynchronous programming with multi-threading in Python.

The consensus for managing multi-processing and async programming in Python is to use Celery, an open source task queue library. I am currently in the middle of using Celery to run a compute-intensive process involving millions of database records. A learning tip I follow is to first replicate, in a simple setup, whatever complex thing I am going to do in a large program. This makes it easy to carry the learnings over to the large program once the simple setup is working. As they say, the best way to eat an elephant is one bite at a time!

Hence I will be dividing this tutorial into the following parts:

- Introduce Celery with a simple application which runs a few functions in multiple threads or processes

- Incorporate Celery in a simple Django server and run the same functions from Django while the web server is running

- Introduce Celery Beat, that is, scheduled tasks similar to cron jobs

- Finally, run this Celery and Django combination on a slightly more compute-intensive project, which we will call the capstone project

The capstone project is the following:

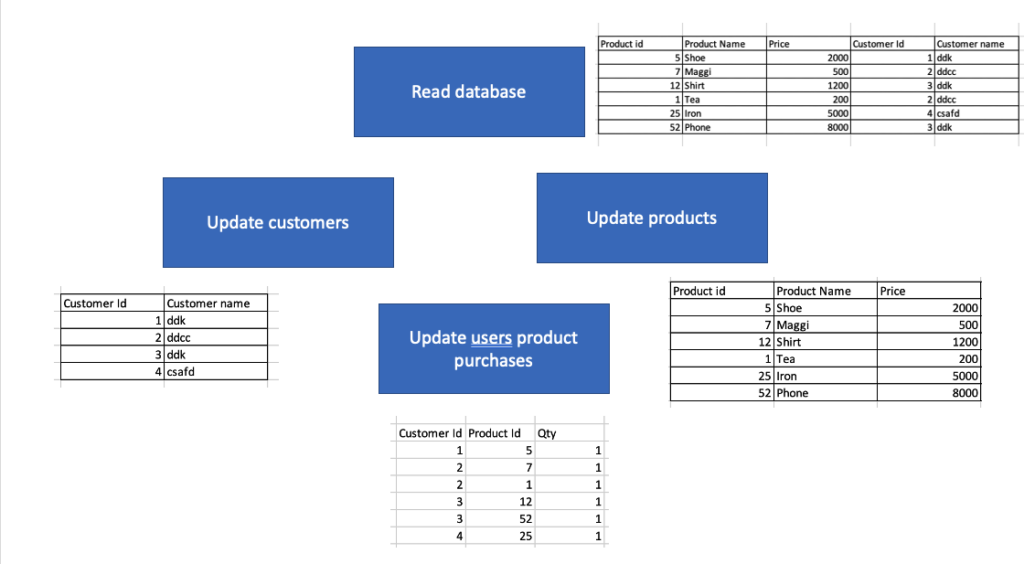

We have an ecommerce website with users and products, and every hour thousands of products are purchased (the owner is a happy man). I have a steady stream of purchase data flowing in for products and their customers. I want to split it into separate product records and customer records, plus a product-customer purchase database, as in the figure (not a great example, I know, but it should suffice to explain what I am doing).

Now, as we can see, update customers and update products can run independently of each other and hence in parallel. Also, within each of the update customers and update products functions, I should be able to spawn multiple threads which read from the database concurrently.
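To make the independence concrete before bringing Celery in, here is a minimal sketch using only the standard library's `concurrent.futures`, not Celery itself. The names `PURCHASES`, `update_customers`, `update_products` and `run_updates` are made up for illustration, and the in-memory list is just a stand-in for the real database.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical purchase stream: (customer, product) pairs standing in for database rows.
PURCHASES = [
    ("alice", "laptop"),
    ("bob", "phone"),
    ("alice", "phone"),
    ("carol", "laptop"),
]


def update_customers(purchases):
    """Build the customer records: the unique customers seen in the stream."""
    return sorted({customer for customer, _ in purchases})


def update_products(purchases):
    """Build the product records: the unique products seen in the stream."""
    return sorted({product for _, product in purchases})


def run_updates(purchases):
    # Neither function reads the other's output, so both can be submitted at once.
    with ThreadPoolExecutor(max_workers=2) as pool:
        customers_future = pool.submit(update_customers, purchases)
        products_future = pool.submit(update_products, purchases)
        # result() blocks until the corresponding function has finished.
        return customers_future.result(), products_future.result()


if __name__ == "__main__":
    customers, products = run_updates(PURCHASES)
    print(customers)
    print(products)
```

The point of the sketch is only the shape of the work: two functions that share an input but not each other's results. In the rest of the tutorial, Celery tasks take the place of the thread pool.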

Let's move on to the first part: setting up Celery in a single file.